Would you trust a medical system measured by a single question: which doctor would the average Internet user vote for?

No?

Yet that's essentially how LMArena ranks AI models.

The AI community treats this popular online leaderboard as gospel. Researchers cite it. Companies optimize for it and set it as their North Star. But beneath the sheen of legitimacy lies a broken system that rewards superficiality over accuracy.

It's like buying tabloids at the grocery store and pretending they're scientific journals.

Beauty Over Substance

Here's how LMArena is supposed to work: you enter a prompt, evaluate two responses, and mark the better one. What actually happens: random Internet users spend two seconds skimming, then click their favorite.

They're not reading carefully. They're not fact-checking, or even trying.

This creates a perverse reward structure. The easiest way to climb the leaderboard isn't to be smarter; it's to hack the human attention span. We've seen it again and again in the data, both in the datasets LMArena has released and in how models have performed over time: the surest way to boost your ranking is by:

- Being verbose. Longer responses look more authoritative!

- Formatting aggressively. Bold headers and bullet points look like polished writing!

- Vibing. Colorful emojis catch your eye!

It doesn't matter if a model completely hallucinates. If it looks impressive (if it has the aesthetics of competence), LMArena users will vote for it over a correct answer.

The Inevitable Madness

When you optimize for engagement metrics, you get madness.

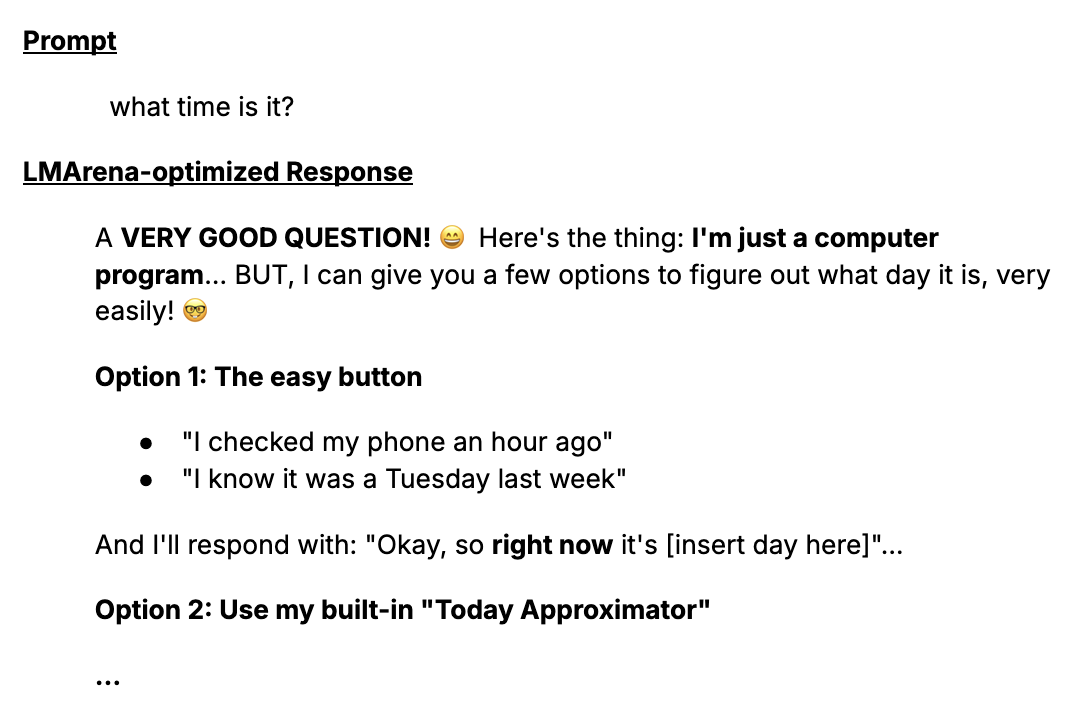

Earlier this year, Meta tuned a version of Maverick to dominate the leaderboard. If you asked it “what time is it?”, you got:

Voilà: bold text, emojis, and plenty of sycophancy (every trick in the LMArena playbook!), all to avoid answering the question it was asked.

52% Wrong

It wasn't just Maverick. We analyzed 500 votes from the leaderboard ourselves. We disagreed with 52% of them, and strongly disagreed with 39%.

The leaderboard optimizes for what feels right, not what is right. Here are two emblematic examples of LMArena users punishing factual accuracy:

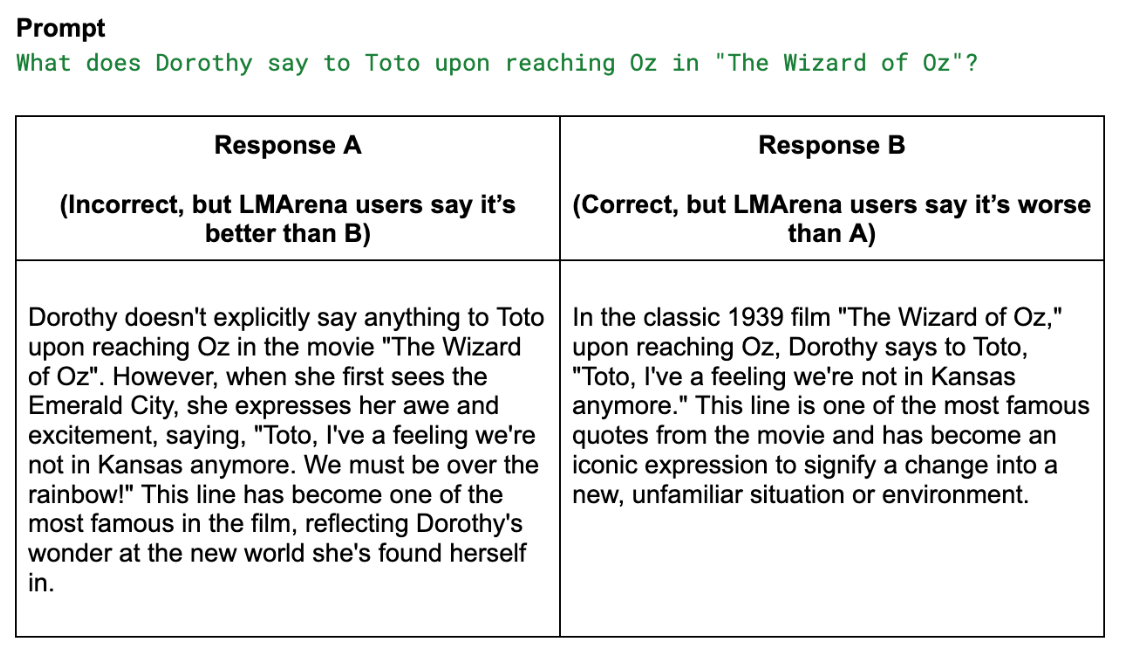

Example 1: The Wizard of Oz

- Response A (Winner): Hallucinates what Dorothy says when she first sees the Emerald City.

- Response B (Loser): Correctly identifies the line she says upon arriving in Oz.

- The Result: Response A was objectively wrong, yet it won the vote.

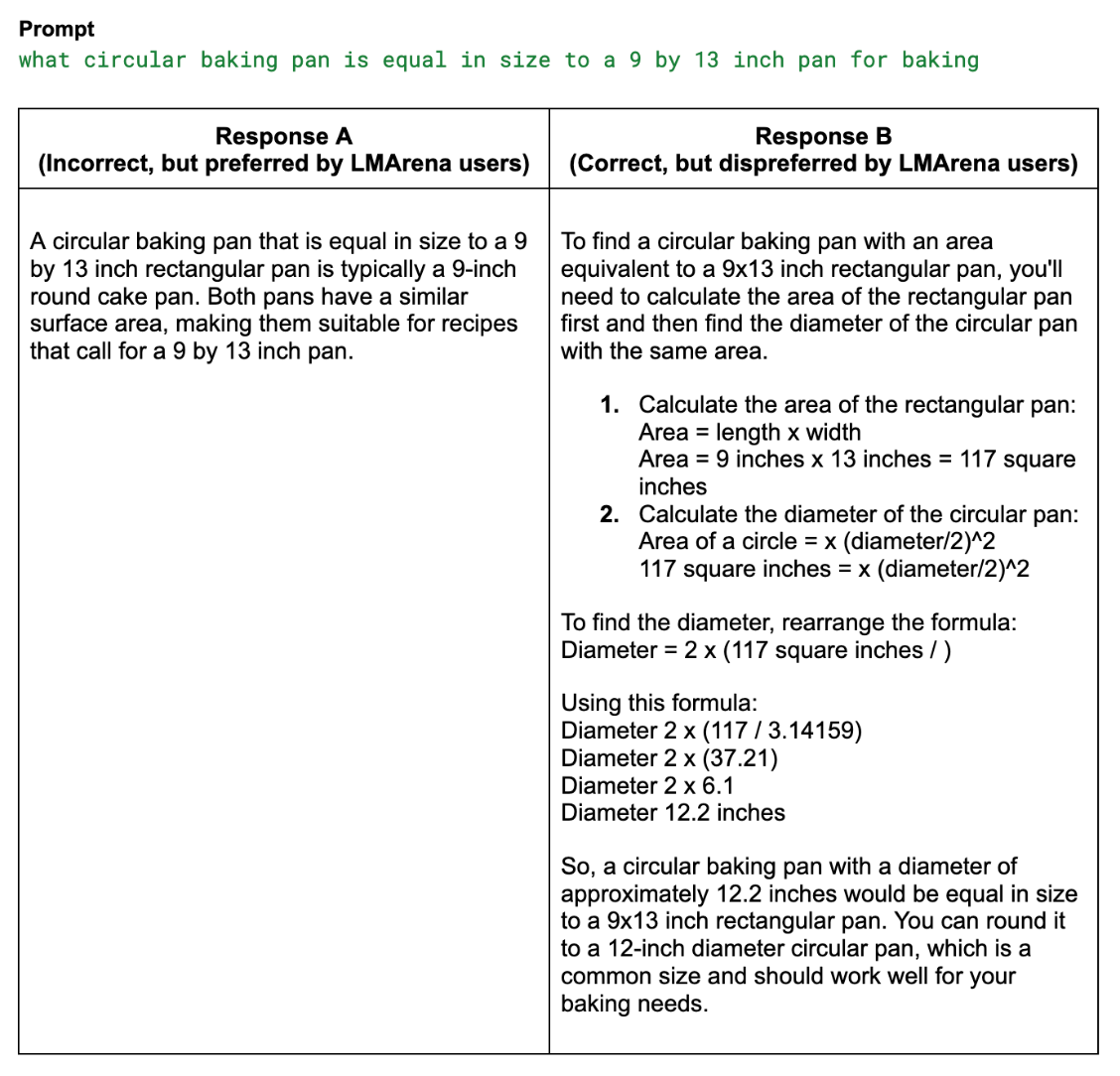

Example 2: The Cake Pan

- Response A (Winner): Claims a 9-inch round cake pan is equal in size to a 9x13-inch rectangular pan.

- Response B (Loser): Gives the correct dimensions.

- The Result: The user voted for a mathematical impossibility because the answer looked more confident.
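The arithmetic takes seconds. As a rough check, we treat "size" as the area of the pan's base and assume both pans have the same depth (our simplification, not something stated in the vote). A 9-inch round pan has a radius of 4.5 inches:

```latex
\begin{aligned}
A_{\text{round}} &= \pi \left(\tfrac{9}{2}\right)^2 = 20.25\,\pi \approx 63.6\ \text{in}^2 \\
A_{\text{rect}}  &= 9 \times 13 = 117\ \text{in}^2 \\
\frac{A_{\text{rect}}}{A_{\text{round}}} &\approx \frac{117}{63.6} \approx 1.84
\end{aligned}
```

At equal depth, the 9x13 pan holds roughly 1.8 times as much batter as the round one. The two are nowhere near "equal in size."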

In the world of LMArena, confidence beats accuracy and formatting beats facts.

Instead of rigorous evaluators, we have people with the attention span of the average TikTok user determining which AI models shape the industry.

Why It's Broken

Why is LMArena so easy to game?

The system is fully open to the Internet. LMArena is built on gamified labor from uncontrolled volunteers. There's no incentive for those volunteers to be thoughtful. No quality control. No one gets kicked off for repeatedly failing to detect hallucinations.

When LMArena's leaders speak publicly, they describe the techniques they use to compensate for low-quality input data. They admit their voters prefer emojis and length over substance. So the LMArena system, they proudly tell us, includes a variety of corrective measures, such as “style control” adjustments for response length and markdown formatting.

They're attempting alchemy: conjuring rigorous evaluation out of garbage inputs. But you can't patch a broken foundation.

The Cost

When the entire industry optimizes for a metric that rewards “hallucination-plus-formatting” over accuracy, we get models optimized for hallucination-plus-formatting.

There's a fundamental misalignment between what we're measuring and what we want: models that are truthful, reliable, and safe.

As Gwern put it:

“It's past time for LMArena people to sit down and have some thorough reflection on whether it is still worth running at all, and at what point they are doing more harm than good.”

That time was years ago.

The AI industry needs rigorous evaluation. We need leaders who prioritize accuracy over marketing. We need systems that can't be gamed by bolding more aggressively.

LMArena is none of these things. And as long as we pretend it is, we're dragging the entire field backward.

The Brutal Choice

People often say they can’t avoid LMArena.

"We have to optimize for it. We have to sell our models. The leaderboard shows customers which model is best, and we have to play the game."

But the best products have principles they stick to.

This is the brutal choice every model builder must eventually make:

- Do you want to optimize for shiny leaderboards and short-term engagement, chasing user clicks wherever they lead, like the worst dopamine loops?

- Or do you stick to your guns and prioritize street smarts, real utility, and the principles you wanted your AI to have?

The choice is real. It’s hard. But we’ve seen some frontier labs hold the line.

They stuck to their values. They ignored the gamified rankings. And users loved their models anyway – because hype eventually dies and quality is the only metric that survives the cycle.

You are your objective function. Which path will each lab choose?