A researcher working on large language models recently told me this:

For the past year, his team has been iterating on a series of new models. To make launch decisions, they evaluated against academic benchmarks like Google’s BIG-Bench. Sometimes, they found the “better-performing” models odd – when looking at examples, they’d prefer the “worse-performing” ones – but chalked this up to random noise.

But their suspicions kept growing! So we started working together to run human evaluations of their models on the real-world tasks they cared about – like copywriting, programming assistance, and tool use.

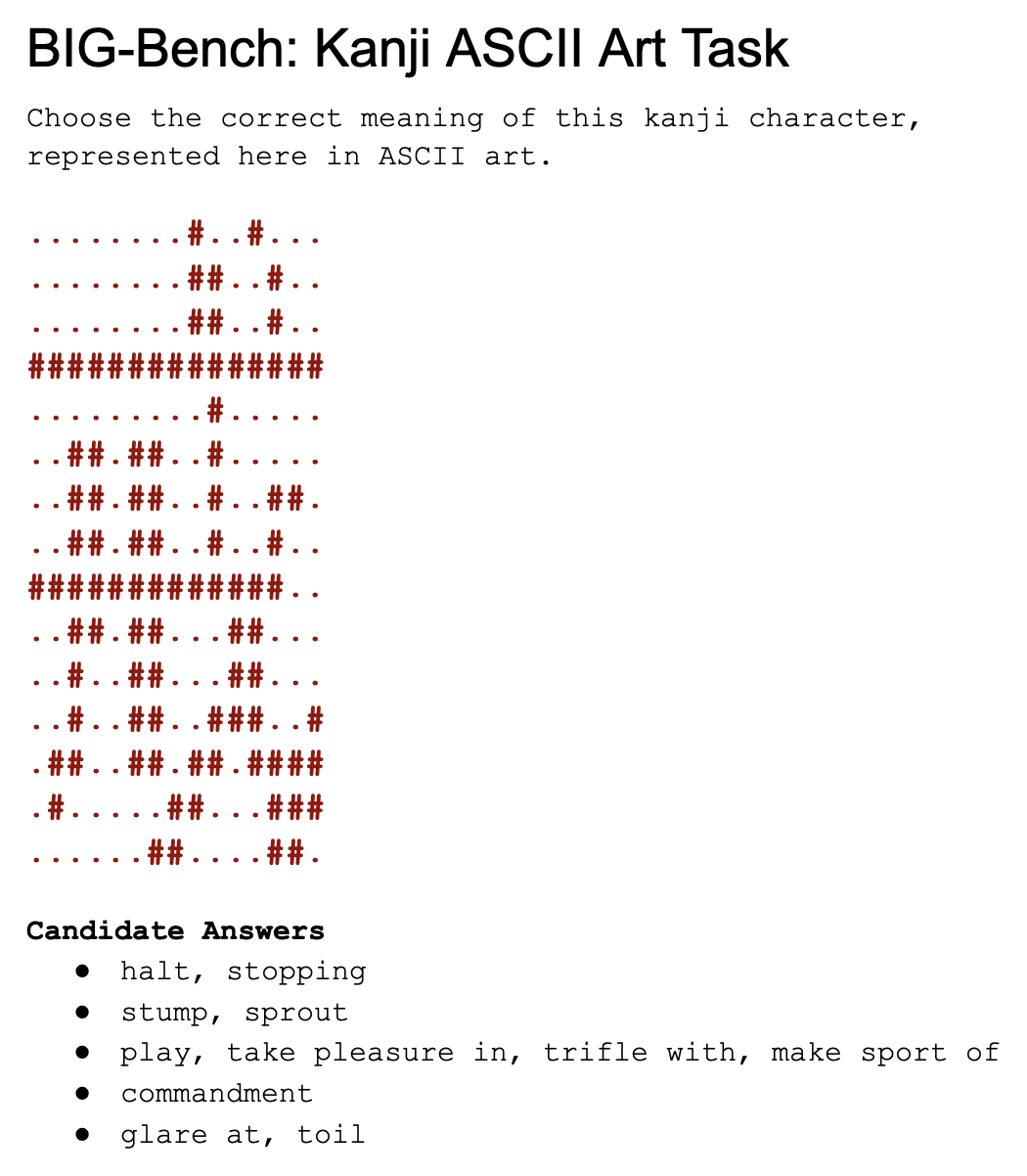

Not BIG-Bench tasks like pronoun resolution, counting intersection points between circles, or classifying Kanji ASCII art (?!).

To be clear, we love that Google created an interesting benchmark dataset! AGI should be able to solve these tasks too. But for companies interested in deploying LLMs today, these are the wrong metrics to use!

The result? On half of the launch decisions they’d made, real-world human evaluation pointed the opposite way from the benchmark: the model with the better benchmark score was the one humans rated worse. Their current model was worse than the one from a year ago!

Solving Sudoku and intersecting polygons might be helpful if your goal is to build a futuristic, generally intelligent superbeing. But nobody’s using language models to solve Sudoku and geometry problems in the real world.

Instead, we want them to be brilliant copywriters, evocative storywriters, and interactive assistants – and when’s the last time you saw an academic benchmark measure humor and creativity?

And so a year of work from dozens of engineers was wasted just like that.

Bad data is everywhere

More and more researchers are starting to see the importance of good data. If you’re measuring your models on the wrong thing, who cares how they perform? And bad data is everywhere: we found, for example, that 30% of Google’s GoEmotions dataset is mislabeled. Stanford’s recent HELM paper says this of summarization benchmarks:

We found human evaluation essential… On summarization, we find that language models produce effective summaries (as measured via human evaluation), but the reference summaries in standard summarization datasets are actually worse.

If you're devoting teams of engineers to building the world's best summarization model, doesn't the lack of good metrics worry you?

The HellaSwag benchmark

Wild amounts of money and manpower are being thrown at large language models. Is progress being measured in the right way?

Here’s an example from HellaSwag, a popular LLM benchmark that presents a scenario and asks which of four continuations is most likely.

HellaSwag Example

Men are standing in a large green field playing lacrosse. People is around the field watching the game. men

- are holding tshirts watching int lacrosse playing.

- are being interviewed in a podium in front of a large group and a gymnast is holding a microphone for the announcers.

- are running side to side of the ield playing lacrosse trying to score.

- are in a field running around playing lacrosse.

Which do you think is the correct answer? Seriously, try it out first! (And no, I didn't mis-copy any rows...)

According to HellaSwag, Continuation #3 is the correct one! But...

- Continuation #3 contains a typo (“ield”). It's also ungrammatical (“are running side to side of the ield”). Do we really prefer language models that write bad English?

- The original prompt is error-ridden too (“People is around”).

- Continuation #1 is nonsensical (“watching int lacrosse”?) even without context.

- In natural English, the men have already been introduced in the first sentence, so a writer wouldn’t refer to them again as bare “men” (without a determiner like “the”) in the third sentence. Bare “men” suggests a new group of men, which would favor Continuation #2, even if it's a bit odd.

- What’s wrong with Continuation #4? It seems a better continuation than #3!
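To make the scoring concrete: a multiple-choice benchmark like HellaSwag gives a model credit only when its top-scored ending matches the dataset's label. Here's a minimal Python sketch of that rule. The `Item` class, `predict`, `accuracy`, and the `prefer_shorter` scorer are our own hypothetical names, not HellaSwag's code; a real evaluation would typically score each ending by the model's (often length-normalized) log-likelihood rather than by raw length.

```python
from dataclasses import dataclass
from typing import Callable, Sequence

# Hypothetical scorer signature: (context, ending) -> higher means "more likely".
Scorer = Callable[[str, str], float]


@dataclass
class Item:
    """One multiple-choice row: a context, candidate endings, and the gold index."""
    context: str
    endings: Sequence[str]
    label: int  # 0-based index of the ending the dataset marks as correct


def predict(item: Item, score: Scorer) -> int:
    """Return the 0-based index of the ending the scorer rates highest."""
    scores = [score(item.context, ending) for ending in item.endings]
    return max(range(len(scores)), key=scores.__getitem__)


def accuracy(items: Sequence[Item], score: Scorer) -> float:
    """Fraction of items where the scorer's pick matches the gold label."""
    correct = sum(predict(item, score) == item.label for item in items)
    return correct / len(items)


def prefer_shorter(context: str, ending: str) -> float:
    """Toy stand-in for a language model: simply prefers shorter endings."""
    return -len(ending)


if __name__ == "__main__":
    lacrosse = Item(
        context=("Men are standing in a large green field playing lacrosse. "
                 "People is around the field watching the game. men"),
        endings=[
            "are holding tshirts watching int lacrosse playing.",
            "are being interviewed in a podium in front of a large group and "
            "a gymnast is holding a microphone for the announcers.",
            "are running side to side of the ield playing lacrosse trying to score.",
            "are in a field running around playing lacrosse.",
        ],
        label=2,  # HellaSwag marks Continuation #3 as correct
    )
    print(predict(lacrosse, prefer_shorter) + 1)  # 1-based continuation number
    print(accuracy([lacrosse], prefer_shorter))
```

With the toy scorer, the sketch picks Continuation #4 and gets 0% on this row, even though #4 is arguably the better English. Any model that prefers #4 is penalized in exactly the same way.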

–

So how typical is this example in the HellaSwag dataset? Ignoring the question of whether it’s measuring anything useful, is it even accurate?

We analyzed the validation set and found errors in 36% of the rows we sampled. (Methodology: we sampled 300 random rows and asked Surgers whether a row contained either of the following:

- One of the other continuations seemed just as good as, or better than, the proposed winner.

- At least one of the “wrong” continuations could be ruled out without even reading the initial scenario. We included this check because guarding against artifacts like this was one of the explicit goals of the HellaSwag dataset.)

Surgers found errors in 107 of the 300 examples. The “ActivityNet” rows were particularly problematic. And while the “WikiHow” rows weren’t quite as egregious, their formatting and structure were rather unnatural.
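The 36% figure is an estimate from a 300-row sample, so it's worth checking how much sampling noise it could carry. This sketch computes the point estimate from the reported counts plus a 95% Wilson score interval. The interval is our own illustrative addition, not part of the original analysis, and it assumes the 300 rows were drawn independently and uniformly at random.

```python
import math


def wilson_interval(errors: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion (z=1.96 gives ~95%)."""
    p = errors / n
    denom = 1 + z**2 / n
    center = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return center - half, center + half


errors, sampled = 107, 300  # counts reported above
rate = errors / sampled
low, high = wilson_interval(errors, sampled)
print(f"error rate: {rate:.1%}")
print(f"95% interval: {low:.1%} to {high:.1%}")
```

For 107 flagged rows out of 300, the interval works out to roughly 30% to 41%, so even the low end means about three in ten sampled rows had a problem.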

Here are examples to illustrate.

HellaSwag Example #1

Man is in roofed gym weightlifting. Woman is walking behind the man watching the man. Woman

- is tightening balls on stand on front of weight bar.

- is lifting eights while he man sits to watch her.

- is doing mediocrity spinning on the floor.

- lift the weight lift man.

Problems:

- The prompt is written in poor English (“in roofed gym”) and is confusing.

- The “correct” continuation (Continuation #2) is full of typos.

- Given the errors in the “correct” continuation, what makes the other continuations any less correct? For example, it’s plausible that the woman is spinning on the floor – even if “mediocrity spinning” doesn’t make any sense, is it any worse than “lifting eights while he man”?

HellaSwag Example #2

A large group of people appear at the starting point and being running through various different scenery's while many people are cheering them on. the people

- then go up the hill backwards at a rapid pace and pass through multiple obstacles and their coach still cheers them on during the event.

- all line up in a line and start running down the track and then they all do a cheer and run with one another.

- are now starting to approach the finish line and some have ribbons placed around their neck.

- try to get to a big goal by jumping up in the air and running very fast in the area as the camera captures them from several different angles.

Problems:

- The prompt itself is confusing and ungrammatical (“being running through various different scenery’s”).

- Continuation #3 is labeled as the correct one. But does it make more sense than the others? As written, it can imply that people have ribbons placed around their necks as they're starting to approach the finish line, and not after.

- Continuation #1 is perfectly sensible if you imagine a fun race where people run backwards. Calling this wrong penalizes creativity – one of the key traits we want in storytelling models.

HellaSwag Example #3

After cooling the dough, she takes it out and cuts them into rectangular pieces. She places the raw cookie on a cookie sheet and bakes them. when the cookies

- are done, she takes a plate and runs them in water.

- are done baking, she places them in an airtight plastic container for storing for future consumption.

- are finished, white letters indicate that they are a gift gift from ms who is helping and sending them to the shop along with other gift and supplies.

- are done, she removes them into the oven.

Problems:

- The prompt is once again confusing and ungrammatical (“she takes it out and cuts them”, “places the raw cookie on a cookie sheet and bakes them”).

- Continuation #3 is ungrammatical (“a gift gift”, “along with other gift”), even without the scenario’s context.

- Continuation #4 is also ungrammatical (“removes them into”) on its own.

HellaSwag Example #4

[header] How to get good grades in economics [title] Develop an understanding of economic theories, history, and practice. [step] When starting to study economics, immerse yourself in existing academic literature on the topic. Browse your library's economics section.

- Try to find sections written by experts who are knowledgeable about the topic, and what they think is most pertinent to the topic. Alternatively, study a textbook to prepare your mind for a class in economics.

- Read newspapers like the financial times or magazines like the economist. Renowned economics blogs like economist's view or grasping reality are good sources for up-to-date information.

- Check online reviews of current books and articles from reputable sources. [substeps] As you practice economics, you may find that you are more engaged in learning the principles of economics in relation to how economics works.

- Over the course of a few days, read any new textbook by shakespeare. Subscribe to any of shakespeare's journals.

Problems:

- Continuation #2 is labeled as the correct one. But is Continuation #1 any less plausible, especially when Continuation #2 contains capitalization errors (“financial times”, “economist's view”)?

HellaSwag Example #5

She blows out all of the smoke that she inhaled, she is laying in bed by herself relaxing. She seems like she is very bored just blowing smoke recording herself. turns out

- she is in lobotomized after that one eyeshadow song as she smokes all the smoke, then her mom gets up and comes out to smoke and help her smoke one more time.

- to be what she is looking for, for the video still has you boys doing some stuff with her and you are both seated together in the living room recording her and illustrating the music she's listening to.

- the digital item records her driving very well.

- , there is another person in the room with her feeding her, she smiles really big and continues exhaling smoke out.

Problems:

- The first continuation is nonsensical and ungrammatical by itself (“in lobotomized”, “eyeshadow song”), even without reading the scenario. But this dataset is supposed to be testing natural language inference, not grammaticality, and one of its express goals was to avoid artifacts like these.

- The fourth continuation is the “correct” one. But it doesn’t make much sense – what does it mean that someone is feeding her, if she’s smoking?

- The third continuation is a non sequitur (“records her driving”), but is it any worse than the fourth continuation? Both are mostly plausible with some nonsense thrown in.
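The second criterion from our methodology, whether a wrong ending can be ruled out without reading the scenario, can be sketched as a per-row check. In this minimal Python sketch, `looks_broken` stands in for whatever judges an ending in isolation (a human rater or an automated grammar checker); `toy_judge` and its phrase list are purely hypothetical, built from the phrases called out in Example #5. The sketch also reports whether the gold ending fails the same check, since several “correct” endings above are themselves ungrammatical.

```python
from typing import Callable, Sequence

# Judges a single ending in isolation; it never sees the scenario.
Judge = Callable[[str], bool]


def context_free_giveaways(endings: Sequence[str], label: int,
                           looks_broken: Judge) -> list[int]:
    """0-based indices of *wrong* endings that can be ruled out on their own."""
    return [i for i, ending in enumerate(endings)
            if i != label and looks_broken(ending)]


def gold_is_broken(endings: Sequence[str], label: int, looks_broken: Judge) -> bool:
    """Whether the ending marked correct fails the same context-free check."""
    return looks_broken(endings[label])


# Hypothetical judge: a phrase list taken from Example #5. A real check would
# use human raters or a grammar checker instead.
FLAGGED_PHRASES = ("in lobotomized", "eyeshadow song")


def toy_judge(ending: str) -> bool:
    return any(phrase in ending for phrase in FLAGGED_PHRASES)


if __name__ == "__main__":
    example_5 = [
        "she is in lobotomized after that one eyeshadow song as she smokes all "
        "the smoke, then her mom gets up and comes out to smoke and help her "
        "smoke one more time.",
        "to be what she is looking for, for the video still has you boys doing "
        "some stuff with her and you are both seated together in the living "
        "room recording her and illustrating the music she's listening to.",
        "the digital item records her driving very well.",
        ", there is another person in the room with her feeding her, she smiles "
        "really big and continues exhaling smoke out.",
    ]
    gold = 3  # HellaSwag marks the fourth continuation as correct
    print(context_free_giveaways(example_5, gold, toy_judge))
    print(gold_is_broken(example_5, gold, toy_judge))
```

On the Example #5 endings, this toy version flags only the first continuation, which is exactly the kind of giveaway HellaSwag set out to remove. A phrase list like this would miss most real problems; its only job here is to make the check runnable.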

If a popular benchmark contains large, obvious flaws like these, can we really trust that we’re training and measuring our models in the right way? If the field of AI wants models to move beyond the lab into real-world applications, we all need to take a harder, closer look at the data first.
